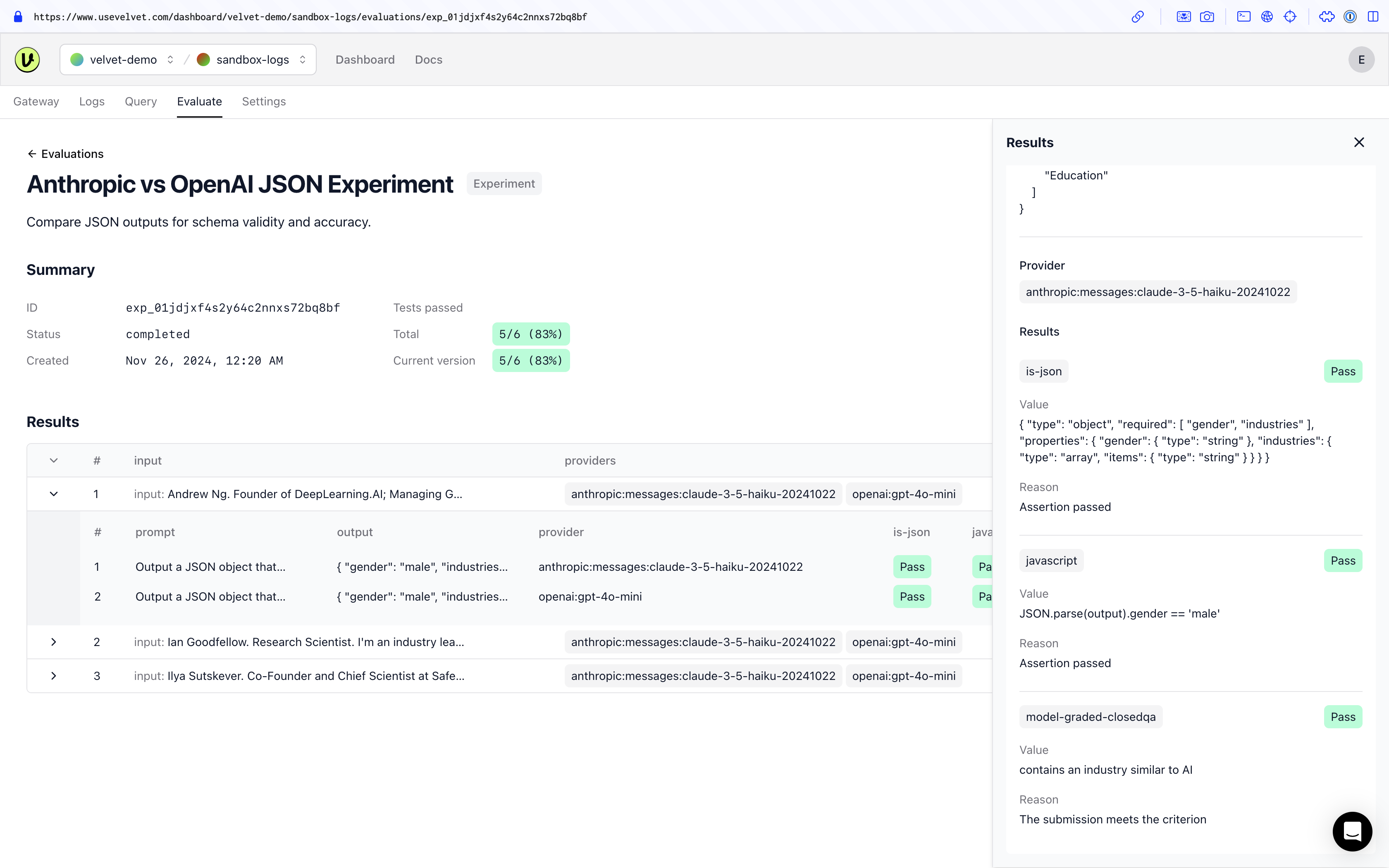

JSON evaluations

Validate JSON outputs

Trusting LLMs to output valid JSON can be tricky. They often struggle with dynamic data, produce inconsistent schemas, and hallucinate values. This doc outlines an approach to evaluating JSON from LLMs so that the outputs are both accurate and reliable.

Issues with JSON outputs

- Inconsistent Schema: JSON may be malformed or incomplete.

- Hallucination: LLMs often hallucinate numbers and values.

- Dynamic Data: LLMs struggle to adapt to dynamic or variable input data.

Evaluate JSON outputs

- Validate JSON Structure: Ensure the generated JSON adheres to a predefined schema.

- Compare Values: Assess key and value accuracy against expected criteria.

- Measure Output Relevancy: Evaluate the relevancy of JSON responses with an LLM judge.

See this JSON experiment example in the Velvet demo space.

Example

Imagine our language model outputs this JSON object:

```json
{
  "gender": "Male",
  "industries": ["AI", "Software", "Big Data"]
}
```

To ensure fields like gender and industries are correct, we need to create assertions.

Basic JSON Validation

Use the is-json assertion to check that the output is valid JSON:

```json
{
  "type": "is-json"
}
```
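As a rough local illustration of what a validity check amounts to, the sketch below tries to parse the output and reports whether parsing succeeded. The helper name `isValidJson` is hypothetical and is not part of Velvet; how Velvet implements is-json internally is not described here.

```javascript
// Hypothetical helper (not a Velvet API): returns true when `output`
// can be parsed as JSON, false when parsing throws.
function isValidJson(output) {
  try {
    JSON.parse(output);
    return true;
  } catch (err) {
    return false;
  }
}

const wellFormed = '{"gender": "Male", "industries": ["AI"]}';
const trailingComma = '{"gender": "Male",}'; // trailing commas are not valid JSON

console.log(isValidJson(wellFormed));
console.log(isValidJson(trailingComma));
```

A check like this only confirms that the text parses. It says nothing about which keys are present, which is why a schema is usually the next step.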

Schema Validation

To validate the JSON structure, define a schema:

```json
{
  "type": "is-json",
  "value": {
    "required": ["gender", "industries"],
    "type": "object",
    "properties": {
      "gender": {
        "type": "string"
      },
      "industries": {
        "type": "array",
        "items": {
          "type": "string"
        }
      }
    }
  }
}
```

This assertion checks that the output is valid JSON, that it includes both required fields, and that each field has the right data type.
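To make the schema's rules concrete, here is a minimal sketch of a validator that handles only the four keywords used above (`type`, `required`, `properties`, `items`). It is an illustration written for this doc, not Velvet's implementation and not a full JSON Schema validator; the names `typeOf`, `has`, and `validate` are hypothetical.

```javascript
// Map a parsed JSON value to its JSON Schema type name.
function typeOf(value) {
  if (Array.isArray(value)) return "array";
  if (value === null) return "null";
  return typeof value; // "string", "number", "boolean", or "object"
}

const has = (obj, key) => Object.prototype.hasOwnProperty.call(obj, key);

// Returns a list of error messages; an empty list means the value passed.
function validate(value, schema, path = "$") {
  const actual = typeOf(value);
  if (schema.type && actual !== schema.type) {
    // Stop here: nested checks are meaningless when the type is wrong.
    return [`${path}: expected ${schema.type}, got ${actual}`];
  }
  const errors = [];
  if (actual === "object") {
    for (const key of schema.required ?? []) {
      if (!has(value, key)) errors.push(`${path}.${key}: missing required field`);
    }
    for (const [key, sub] of Object.entries(schema.properties ?? {})) {
      if (has(value, key)) errors.push(...validate(value[key], sub, `${path}.${key}`));
    }
  }
  if (actual === "array" && schema.items) {
    value.forEach((item, i) => {
      errors.push(...validate(item, schema.items, `${path}[${i}]`));
    });
  }
  return errors;
}

const schema = {
  required: ["gender", "industries"],
  type: "object",
  properties: {
    gender: { type: "string" },
    industries: { type: "array", items: { type: "string" } },
  },
};

const good = JSON.parse('{"gender": "Male", "industries": ["AI", "Software"]}');
const bad = JSON.parse('{"gender": "Male", "industries": ["AI", 3]}');

console.log(validate(good, schema)); // no errors
console.log(validate(bad, schema)); // one error, for the number inside industries
```

In practice a maintained JSON Schema library is a better choice than hand-rolled checks; the sketch only shows what the schema is asking for.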

Compare Values

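Use javascript assertions to write custom code for more advanced checks on the output values. The comparison below lowercases the field first, so the "Male" value from the example output still matches:

```json
{
  "type": "javascript",
  "value": "JSON.parse(output).gender.toLowerCase() === 'male'"
}
```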
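String comparison in JavaScript is case-sensitive, so "Male" and "male" are different values, and an unparseable output or a missing field makes a one-line expression throw. The sketch below shows one defensive way to write the same check as a standalone function. The name `genderMatches` is hypothetical, and whether a multi-line function can be used directly as an assertion value is not covered here.

```javascript
// Hypothetical helper: true when the parsed `gender` field equals `expected`,
// ignoring case and surrounding whitespace. Returns false instead of throwing
// when the output is not JSON or the field is missing or not a string.
function genderMatches(output, expected) {
  let parsed;
  try {
    parsed = JSON.parse(output);
  } catch {
    return false;
  }
  const gender = parsed?.gender;
  if (typeof gender !== "string") return false;
  return gender.trim().toLowerCase() === expected.trim().toLowerCase();
}

console.log(genderMatches('{"gender": "Male"}', "male"));
console.log(genderMatches('{"gender": null}', "male"));
console.log(genderMatches("not json", "male"));
```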

Measure Output Relevancy with an LLM

Velvet supports LLM-based assertions such as model-graded-closedqa and llm-rubric. To use one, add the transform directive to preprocess the output, then write a prompt that grades the relevancy of the resulting value:

```json
{
  "transform": "JSON.parse(output).industries",
  "type": "model-graded-closedqa",
  "value": "contains an industry similar to AI"
}
```
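To see which value the grading prompt works with, the sketch below writes the transform expression as a plain function and applies it to the example output from earlier. This is only a local illustration of the expression itself; the function name `transform` and the sample string are assumptions for this example, and how Velvet passes the result to the grading model is not shown.

```javascript
// The transform expression from the assertion, written as a plain function.
function transform(output) {
  return JSON.parse(output).industries;
}

// Sample model output, matching the example object used earlier in this doc.
const output = '{"gender": "Male", "industries": ["AI", "Software", "Big Data"]}';

// Only the industries array remains; the gender field is dropped.
const graded = transform(output);
console.log(graded);
```

Narrowing the output this way keeps the grading criterion focused on the one field it is about.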

Evaluation Configuration

Here's the complete example configuration for evaluating LLMs with JSON:

```json
{
  "name": "JSON evaluation",
  "description": "Evaluate JSON outputs for schema validity and accuracy.",
  "prompts": [
    "Output a JSON object that contains the keys `gender` and `industries`, describing the following person: {{input}}"
  ],
  "providers": [
    {
      "id": "openai:gpt-3.5-turbo-0125",
      "config": {
        "response_format": { "type": "json_object" }
      }
    },
    {
      "id": "openai:gpt-4o-mini",
      "config": {
        "response_format": { "type": "json_object" }
      }
    }
  ],
  "tests": [
    {
      "vars": {
        "input": [
          "Andrew Ng. Founder of DeepLearning.AI; Managing General Partner of AI Fund; Exec Chairman of Landing AI.",
          "Ian Goodfellow. Research Scientist. I'm an industry leader in machine learning.",
          "Ilya Sutskever. Co-Founder and Chief Scientist at Safe Superintelligence Inc."
        ]
      },
      "assert": [
        {
          "type": "is-json",
          "value": {
            "required": ["gender", "industries"],
            "type": "object",
            "properties": {
              "gender": {
                "type": "string"
              },
              "industries": {
                "type": "array",
                "items": {
                  "type": "string"
                }
              }
            }
          }
        },
        {
          "type": "javascript",
          "value": "JSON.parse(output).gender.toLowerCase() === 'male'"
        },
        {
          "transform": "JSON.parse(output).industries",
          "type": "model-graded-closedqa",
          "value": "contains an industry similar to AI"
        }
      ]
    }
  ]
}
```
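The sketch below illustrates how the `{{input}}` placeholder in the prompt could be filled for each entry in `vars.input`. It assumes that every array entry becomes its own test case, which is an assumption about how the configuration is expanded and is not confirmed by this doc. The helper `renderPrompt` is hypothetical.

```javascript
// Hypothetical helper: replace {{name}} placeholders with values from `vars`.
// Placeholders without a matching variable are left untouched.
function renderPrompt(template, vars) {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(vars, name) ? String(vars[name]) : match
  );
}

const template =
  "Output a JSON object that contains the keys `gender` and `industries`, " +
  "describing the following person: {{input}}";

const inputs = [
  "Andrew Ng. Founder of DeepLearning.AI; Managing General Partner of AI Fund; Exec Chairman of Landing AI.",
  "Ian Goodfellow. Research Scientist. I'm an industry leader in machine learning.",
  "Ilya Sutskever. Co-Founder and Chief Scientist at Safe Superintelligence Inc.",
];

// Assumption: one rendered prompt per input entry.
const prompts = inputs.map((input) => renderPrompt(template, { input }));
prompts.forEach((prompt) => console.log(prompt));
```

Under that assumption, two providers and three inputs would produce six outputs, each checked by the same three assertions.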

Summary

By using structured JSON-based evaluations, including schema validation and custom assertions, you can verify that LLM outputs meet your required standards. This process detects potential issues and improves the reliability of your LLM app.
