Generate datasets

Export logs for fine-tuning or batch jobs.

Create a dataset

OpenAI allows you to fine-tune gpt-4o-mini. Use our AI SQL editor to create a dataset you can use for fine-tuning and other batch processes.

Example use cases:

- Export datasets for fine-tuning models

- Select records for evaluations of different models

- Identify example sets for any batch process

Query your dataset

Navigate to the AI SQL editor in your Velvet app. Write SQL to gather the relevant dataset.

Here is an example SQL query that combines the messages of each log into a single column.

-- CTE to gather all messages with appropriate roles and contents
-- message_order keeps the system, user, assistant sequence when aggregating
WITH messages AS (
  SELECT
    id,
    created_at,
    1 AS message_order,
    jsonb_build_object('role', 'system', 'content', request->'body'->'messages'->0->>'content') AS message
  FROM llm_logs
  UNION ALL
  SELECT
    id,
    created_at,
    2 AS message_order,
    jsonb_build_object('role', 'user', 'content', request->'body'->'messages'->1->>'content') AS message
  FROM llm_logs
  UNION ALL
  SELECT
    id,
    created_at,
    3 AS message_order,
    jsonb_build_object('role', 'assistant', 'content', response->'body'->'choices'->0->'message'->>'content') AS message
  FROM llm_logs
)
-- Aggregate messages for each log entry
SELECT
  jsonb_build_object(
    'messages', jsonb_agg(message ORDER BY message_order)
  ) AS transformed_log
FROM messages
GROUP BY id, created_at
ORDER BY created_at DESC
LIMIT 10; -- modify as needed

To restrict the dataset to a single endpoint, add a filter CTE at the top of the query and select from it instead of llm_logs.

-- CTE to create a common filter
WITH filtered_logs AS (
  SELECT *
  FROM llm_logs
  WHERE request->>'url' = '/chat/completions'
),
-- CTE to gather all messages with appropriate roles and contents
-- message_order keeps the system, user, assistant sequence when aggregating
messages AS (
  SELECT
    id,
    created_at,
    1 AS message_order,
    jsonb_build_object('role', 'system', 'content', request->'body'->'messages'->0->>'content') AS message
  FROM filtered_logs
  UNION ALL
  SELECT
    id,
    created_at,
    2 AS message_order,
    jsonb_build_object('role', 'user', 'content', request->'body'->'messages'->1->>'content') AS message
  FROM filtered_logs
  UNION ALL
  SELECT
    id,
    created_at,
    3 AS message_order,
    jsonb_build_object('role', 'assistant', 'content', response->'body'->'choices'->0->'message'->>'content') AS message
  FROM filtered_logs
)
-- Aggregate messages for each log entry
SELECT
  jsonb_build_object(
    'messages', jsonb_agg(message ORDER BY message_order)
  ) AS transformed_log
FROM messages
GROUP BY id, created_at
ORDER BY created_at DESC
LIMIT 10; -- modify as needed

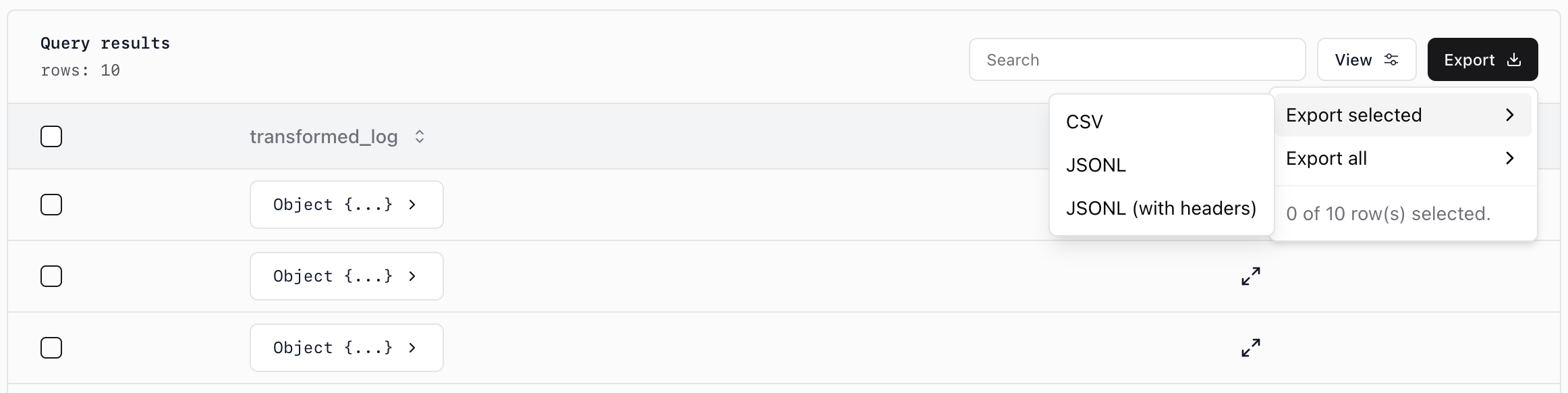
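To make the transformation easier to follow, here is a minimal Python sketch of what the query does to a single log row. It follows the same JSON paths as the SQL and, like the SQL, assumes the first request message is the system prompt and the second is the user message. The function name and the sample log are hypothetical and only illustrate the shape of the data.

```python
import json


def log_to_training_example(log: dict) -> dict:
    """Build a {"messages": [...]} record from one log, mirroring the SQL paths."""
    request_messages = log["request"]["body"]["messages"]
    assistant_content = log["response"]["body"]["choices"][0]["message"]["content"]
    return {
        "messages": [
            # Assumption (same as the query): index 0 is the system prompt.
            {"role": "system", "content": request_messages[0]["content"]},
            # Assumption (same as the query): index 1 is the user message.
            {"role": "user", "content": request_messages[1]["content"]},
            {"role": "assistant", "content": assistant_content},
        ]
    }


# Hypothetical log row, shaped like the JSON paths used in the query.
sample_log = {
    "request": {
        "url": "/chat/completions",
        "body": {
            "messages": [
                {"role": "system", "content": "You are a helpful assistant."},
                {"role": "user", "content": "What is JSONL?"},
            ]
        },
    },
    "response": {
        "body": {
            "choices": [
                {"message": {"role": "assistant", "content": "One JSON object per line."}}
            ]
        }
    },
}

example = log_to_training_example(sample_log)
print(json.dumps(example, indent=2))
```

Logs that do not follow this layout, such as requests without a system prompt or multi-turn conversations, would need a different mapping in both the sketch and the query.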

Export as JSONL

OpenAI's fine-tuning and batch endpoints require the dataset to be formatted as JSONL.
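For readers unfamiliar with the format, JSONL stores one complete JSON object per line. The sketch below shows how records shaped like the query output would be serialized. The `to_jsonl` helper and the sample records are hypothetical and use only the Python standard library.

```python
import json


def to_jsonl(records: list[dict]) -> str:
    """Serialize each record as a single line of JSON, one record per line."""
    # json.dumps escapes newlines inside strings, so each record stays on one line.
    return "".join(json.dumps(record) + "\n" for record in records)


# Hypothetical records in the {"messages": [...]} shape produced by the query.
records = [
    {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Say hello."},
            {"role": "assistant", "content": "Hello!"},
        ]
    },
    {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": "Write two lines."},
            {"role": "assistant", "content": "Line one\nLine two"},
        ]
    },
]

jsonl_text = to_jsonl(records)
print(jsonl_text, end="")
```

Even though the second assistant message contains a line break, it is written as an escaped `\n`, so the file still has exactly one record per line.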

Once you've run the SQL query and have a result set you want to use, click the Export button in the data table. You can export only the selected rows or the entire result set. Select JSONL as the export type.

You'll now have a .jsonl file to upload to OpenAI or use elsewhere.
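Before uploading, it can help to check the export locally. The following is a minimal sketch, assuming each exported line is a JSON object with a top-level `messages` list. If your export nests the object under the column name (`transformed_log` in the example query), unwrap it first. The `find_problems` helper and the sample lines are hypothetical, and the role check covers only the three roles the example query produces.

```python
import json
from typing import Iterable

# Roles emitted by the example query; extend this set if your data uses others.
EXPECTED_ROLES = {"system", "user", "assistant"}


def find_problems(lines: Iterable[str]) -> list[str]:
    """Return one description per bad line; an empty list means all lines passed."""
    problems = []
    for line_number, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # ignore blank lines, such as a trailing newline
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append(f"line {line_number}: invalid JSON ({exc.msg})")
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {line_number}: missing or empty 'messages' list")
            continue
        for message in messages:
            valid = (
                isinstance(message, dict)
                and message.get("role") in EXPECTED_ROLES
                and isinstance(message.get("content"), str)
            )
            if not valid:
                problems.append(
                    f"line {line_number}: each message needs an expected role and string content"
                )
                break
    return problems


# Hypothetical sample: one good line, one with null content, one that is not JSON.
sample_lines = [
    '{"messages": [{"role": "user", "content": "Hi"}, {"role": "assistant", "content": "Hello!"}]}\n',
    '{"messages": [{"role": "system", "content": null}]}\n',
    "not json\n",
]

problems = find_problems(sample_lines)
for problem in problems:
    print(problem)
```

To check a real export, pass an open file instead of the sample list, for example `find_problems(open("export.jsonl", encoding="utf-8"))`, where the filename is a placeholder. Null content is worth checking for because the example query emits a `null` content value whenever a log has no message at the expected position.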
